Implicit Repair with Reinforcement Learning in Emergent Communication

AAMAS 2025

Abstract

Conversational repair is a mechanism used to detect and resolve miscommunication and misinformation problems when two or more agents interact. One particular and underexplored form of repair in emergent communication is the implicit repair mechanism, where the interlocutor purposely conveys the desired information in such a way as to prevent misinformation from any other interlocutor. This work explores how redundancy can modify the emergent communication protocol so that it continues to convey the information needed to complete the underlying task, even under additional external environmental pressures such as noise. We extend the signaling game known as the Lewis Game by adding noise to the communication channel and to the inputs received by the agents. Our analysis shows that agents add redundancy to the transmitted messages to counter the negative impact of noise on task success. We also observe that the generalization capabilities of the emerging communication protocol remain equivalent to those of architectures employed in simpler, entirely deterministic games. Finally, our method is the only one suitable for producing robust communication protocols that can handle cases with and without noise while maintaining increased generalization performance levels.

Motivation

Emergent Communication (EM) comprises the study and analysis of the evolution of human language. Typically, this is done through communication games, in which language protocols emerge purely as a cooperation mechanism for solving the task at hand, without any prior knowledge.

In this work, we focus on a particular linguistic concept called repair mechanisms. A repair mechanism is any procedure used to detect and clarify information sent by any interlocutor during a dialogue. Broadly, repair mechanisms divide into explicit and implicit ones. The former consist of follow-up dialogues that an interlocutor starts in order to clarify past information. The latter are subtler: the interlocutor conveying the original information intentionally expresses it in a way that minimizes misinformation and preemptively avoids follow-up dialogues to repair it. As a simple example, imagine that persons A and B are looking at a photo album, particularly at two photos, each containing a person. The dialogue begins as follows:

1. A: She is a writer and a photographer.

2. B <looks confused>: Which one?

3. A: The album’s author.

In this example, points 2 and 3 constitute an explicit repair mechanism: B did not understand some information and started a follow-up dialogue to clarify it. Let’s now consider a second version of the dialogue, where A says:

- A: The author of this album is also a writer.

This version of the dialogue is considered an implicit repair mechanism, where A preemptively refines and sends the information in such a way as to avoid any misinformation.

Methodology

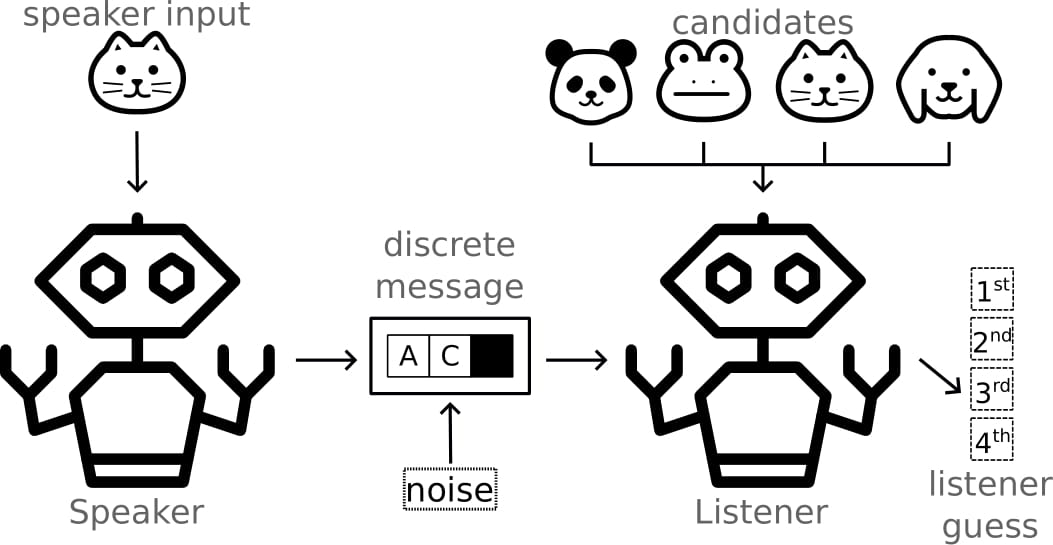

Aiming to study implicit repair mechanisms in a confined and artificial setup, we derive a new extension to the Lewis Game (LG), called the Noisy Lewis Game, or NLG. As depicted in Figure 1, NLG comprises two agents, a Speaker and a Listener. The game unfolds as follows:

- The Speaker receives a target object and describes it by sending a discrete message.

- The message goes through a noisy process where random tokens are masked out before arriving at the Listener.

- The Listener receives the noisy message together with a list of objects called candidates, and must identify which candidate is the target object that was given to the Speaker.
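The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation used in this work: the `MASK` token id, the choice to mask each token independently with probability `noise_level`, and the hand-written `toy_speaker` and `toy_listener` are all hypothetical stand-ins for the learned agents and the actual noise process.

```python
import random

MASK = -1  # hypothetical id for a masked-out token (an assumption)


def noisy_channel(message, noise_level, rng):
    """Mask each token independently with probability `noise_level` (assumed noise model)."""
    return [MASK if rng.random() < noise_level else tok for tok in message]


def play_round(speaker, listener, target, candidates, noise_level, rng):
    """Play one round; return True when the Listener picks the target."""
    message = speaker(target)                             # step 1: describe the target
    received = noisy_channel(message, noise_level, rng)   # step 2: noisy channel
    choice = listener(received, candidates)               # step 3: index into candidates
    return candidates[choice] == target


def toy_speaker(target, length=4):
    """Hand-written stand-in for a learned Speaker: repeat the target id."""
    return [target] * length


def toy_listener(received, candidates):
    """Hand-written stand-in for a learned Listener: use the first readable token."""
    for tok in received:
        if tok != MASK and tok in candidates:
            return candidates.index(tok)
    return 0  # every token was masked: fall back to the first candidate


if __name__ == "__main__":
    rng = random.Random(0)
    wins = sum(play_round(toy_speaker, toy_listener, 7, [2, 7, 9], 0.5, rng)
               for _ in range(1000))
    print("success rate at noise level 0.5:", wins / 1000)
```

With `noise_level=0.0` the channel returns the message unchanged, so the Listener always sees the full message.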

NLG is suitable to evaluate emergent communication protocols since the game’s success is completely dependent on the cooperation between agents, which happens only if the agents develop a common communication protocol.

Furthermore, both the Speaker and the Listener are deep reinforcement learning agents, deployed in a RIAL (Reinforced Inter-Agent Learning) setting. In such a setting, agents perceive each other as part of the environment, which means that no gradient flows between agents. This premise allows us to create fully discrete communication protocols, helping us draw conclusions that are more consistent with natural language. Additionally, we use images from real-world datasets, such as ImageNet and CelebA, as the objects to discriminate in both games. This creates a more complex game than simply discriminating categorical inputs (as most previous literature does).

Finally, LG is a particular case of NLG where the communication channel has no noise. As such, the message is always given in full to the Listener.

Some Results

We highlight here two broad results regarding “how” and “why” implicit redundancy seems to naturally emerge in complex environments such as NLG.

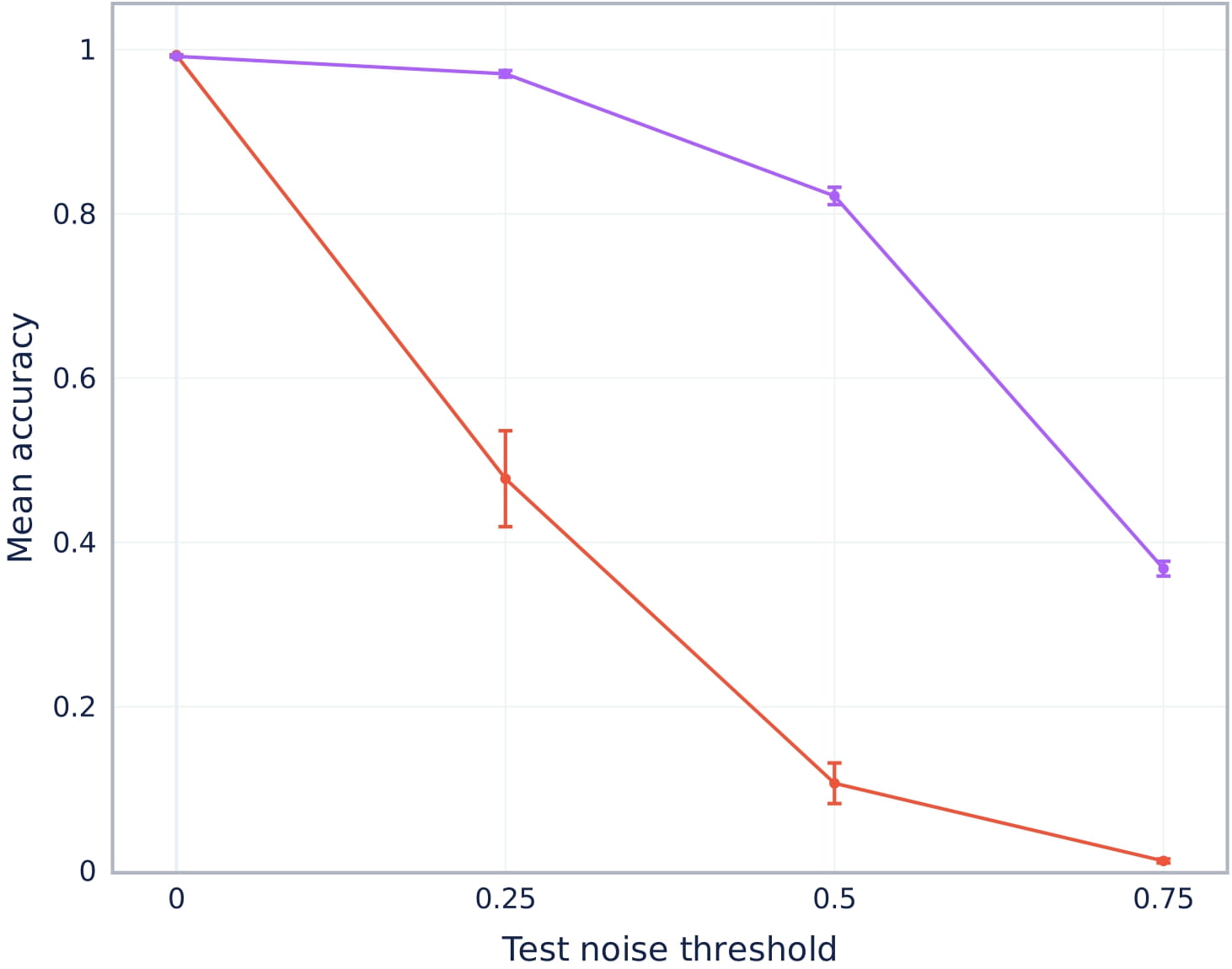

In our experiments, we observe that communication protocols emerging from NLG display a robustness property to counter the negative effect induced by the noisy communication channel. Figure 2 highlights such findings, where NLG does not lose performance against LG when there is no noise in the communication channel. Furthermore, for any positive noise level (ratio of masked tokens), NLG achieves substantially higher performance than LG.

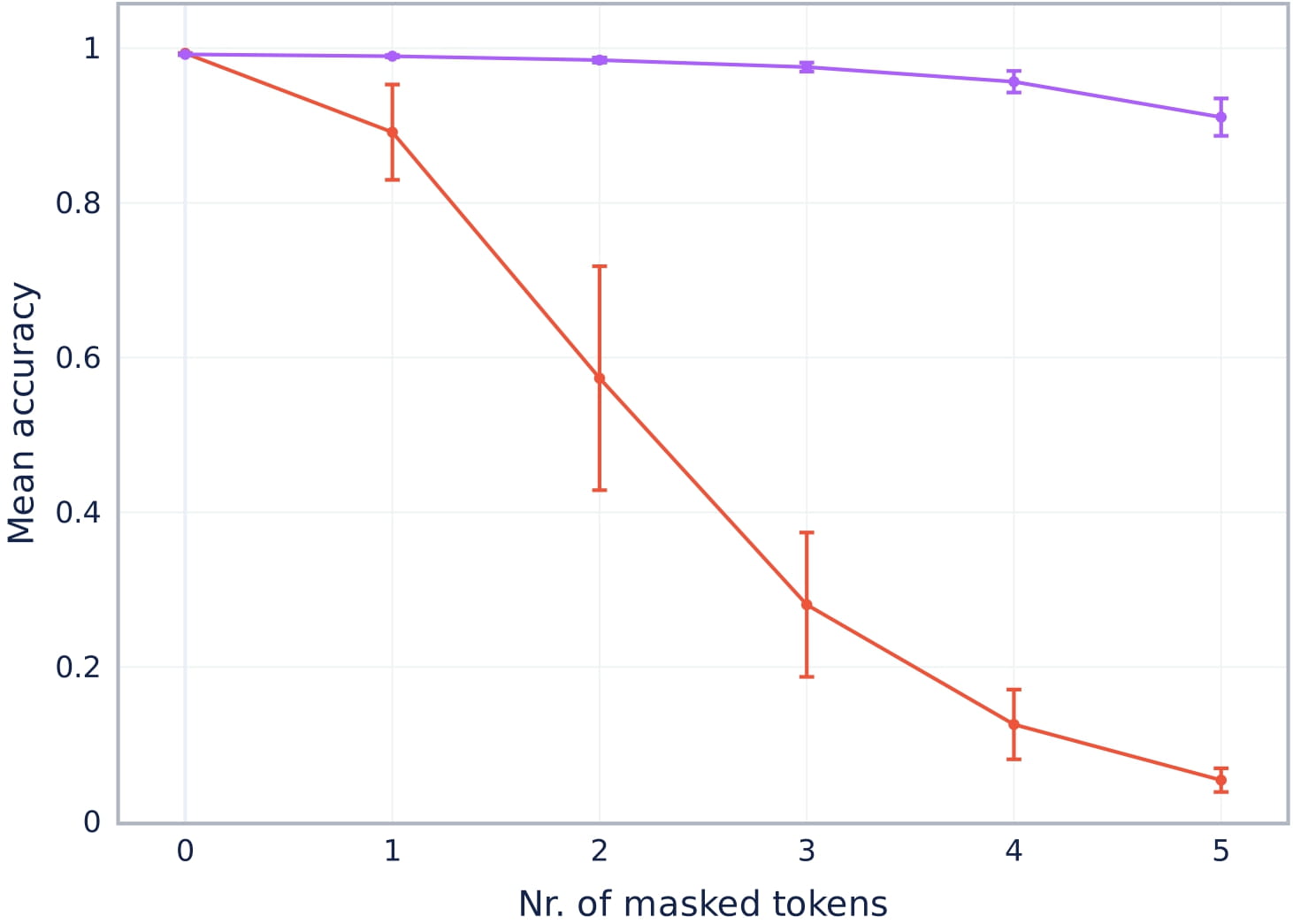

We also perform a finer-grained evaluation that measures the agents’ performance for a specific number of masked tokens, increasing that number one token at a time (from 0 tokens up to half of the message tokens). For each number of masked tokens, we sample multiple mask combinations to cover a diverse range of ways in which noise could affect the information sent. As Figure 3 depicts, NLG performance decreases very slowly as the number of masked tokens increases. Moreover, regardless of which tokens are masked, NLG’s performance shows notably low variance. This indicates that NLG implicitly encodes information redundantly, so that the message still carries the details needed to solve the game, in this case to discriminate the correct candidate from the distractor candidates. The baseline (LG), on the other hand, obtains substantially lower results even with only one masked token, and its performance varies widely across different masks with the same number of masked tokens. This high variance arises because LG gives distinct importance to different token positions in the message, which is the opposite of redundant behavior.
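The evaluation procedure described above (fix the number of masked tokens, sample several masks, then look at the mean and spread of performance across masks) can be sketched as follows. This is a toy illustration, not the evaluation code of this work: `redundant_speaker` and `positional_speaker` are hypothetical hand-written protocols standing in for the NLG and LG agents, and `MASK`, `LENGTH`, and `CANDIDATES` are assumed values chosen only to make the sketch runnable.

```python
import random
import statistics

MASK = -1                   # hypothetical id for a masked token
LENGTH = 6                  # hypothetical message length
CANDIDATES = [1, 2, 3, 4]   # toy objects, identified by integers


def apply_mask(message, positions):
    """Mask out the tokens at the given positions."""
    return [MASK if i in positions else tok for i, tok in enumerate(message)]


def sample_masks(length, k, n_masks, rng):
    """Sample `n_masks` sets of `k` distinct positions to mask."""
    return [frozenset(rng.sample(range(length), k)) for _ in range(n_masks)]


def redundant_speaker(target):
    """Toy redundant protocol: every position carries the target."""
    return [target] * LENGTH


def positional_speaker(target):
    """Toy non-redundant protocol: only position 0 is informative."""
    return [target] + [0] * (LENGTH - 1)


def listener(received, candidates):
    """Pick the candidate named by the first readable token, else guess index 0."""
    for tok in received:
        if tok in candidates:
            return candidates.index(tok)
    return 0


def accuracy_per_mask(speaker, masks):
    """Accuracy over all targets, computed separately for each mask."""
    scores = []
    for positions in masks:
        hits = sum(
            CANDIDATES[listener(apply_mask(speaker(t), positions), CANDIDATES)] == t
            for t in CANDIDATES
        )
        scores.append(hits / len(CANDIDATES))
    return scores


def report(n_masks=20, seed=0):
    """Print mean and variance of accuracy across masks for each mask size."""
    rng = random.Random(seed)
    for k in range(LENGTH // 2 + 1):  # from 0 tokens up to half of the message
        masks = sample_masks(LENGTH, k, n_masks, rng)
        for name, speaker in [("redundant", redundant_speaker),
                              ("positional", positional_speaker)]:
            scores = accuracy_per_mask(speaker, masks)
            print(k, name, statistics.mean(scores), statistics.pvariance(scores))


if __name__ == "__main__":
    report()
```

In this toy setup the redundant protocol is unaffected by which positions are masked, while the positional protocol's accuracy depends entirely on whether position 0 survives, which is the kind of mask-dependent variance the evaluation is designed to expose.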

Conclusion

To conclude, we show that implicit repair mechanisms naturally emerge in complex environments such as NLG. This emergence happens because NLG imposes additional external pressures that make cooperation between agents harder. To keep solving the game successfully, the agents learn to encode and send information redundantly, which is an implicit repair mechanism. As a result, the communication protocol becomes robust to the negative effect of randomly masking tokens in the message.